Master Data Management | Three-step process to fix MDM

Master data can shut your organization down! Below is a case study of a $2.8B life-sciences manufacturer running its business on an outdated, poorly managed ERP (enterprise resource planning) system, and the steps taken to give it an effective and efficient business processing environment. To help you get a handle on MDM, HDPlus offers master data management strategy support.

The project started in March 2014. After an initial analysis and a technical point-of-view project, it became evident that the company had major process issues in addition to poor ERP data quality. The organization was a private equity carve-out from an international giant in the photographic sector. Having fallen from worldwide market dominance, gone through bankruptcy, and sold off divisions, the parent company was a mere shadow of its former self. Oddly, the parent company had been one of the first pioneers of digital photography, yet it tabled the technology in favor of the higher margins of film manufacturing. Since the dramatic decline brought about by the explosion of digital camera technology, it has continued to struggle.

Private equity eventually became interested and purchased the $2.8B international health division from the parent company. After the purchase was announced and the carve-out began, the IT team simply made a backup copy of the parent company’s ERP system, so the old data and the 7,000 customizations made to it were transferred to the new company. In a complete lapse of forward thinking, the decision was made to ignore master data, and little to no data cleansing took place. After 7 more years of running the ERP system with no focus on master data, the company finally realized that running the organization on incomplete, miscoded records, riddled with duplicates created to work around a lack of functional ERP knowledge, simply doesn’t work.

The ERP system the company inherited carried over 7,000 customizations, which locked the company into the functionality of version 4.7 (and, in some areas of the code, 3.1) when the ERP’s current release is 6.0. In addition, after years of RIFs (reductions in force) at the parent company and the sale of the division, knowledge of the ERP system and how to use it functionally was in short supply. The company was limping along on a multi-million-dollar investment that delivered only minimal business benefit.

With such a disastrous system, a redeployment was considered, but limited funding quickly eliminated that option; for the same reason, the company has not deployed archiving either. On top of everything else, the 7+ years of data included an unknown amount of data from the parent corporation that was still spinning on disk, still being backed up, and getting in the way of running the company.

The initial analysis was done domain by domain. During the process we discovered close to 39% duplication in the customer tables, incomplete records, and grossly miscoded records, thanks largely to built-in pull-down menus, some with over 200 items, all of them outdated. When you sell medical equipment, do category options such as “toy store” or “smoke shop” make sense?
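A domain-level profile of this kind can be approximated in a few lines. The sketch below is illustrative only and is not the tooling used on the project: it assumes customer records are Python dicts with hypothetical fields `id`, `name`, `tax_id`, and `category`, a hypothetical approved category list, and a deliberately simple duplicate rule (records whose names match after lowercasing and stripping punctuation and legal suffixes).

```python
import re
from collections import defaultdict

# Hypothetical approved category list for a medical-equipment seller (assumption).
APPROVED_CATEGORIES = {"hospital", "clinic", "laboratory", "distributor"}
# Hypothetical fields a complete customer record must have (assumption).
REQUIRED_FIELDS = ("name", "tax_id", "category")
# Legal-form suffixes ignored when comparing names (illustrative, not exhaustive).
LEGAL_SUFFIXES = {"inc", "ltd", "llc", "gmbh", "sa"}


def normalize(name: str) -> str:
    """Lowercase, drop punctuation and legal suffixes so near-identical names share a key."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", name.lower())
    return " ".join(t for t in cleaned.split() if t not in LEGAL_SUFFIXES)


def profile(records: list[dict]) -> dict[str, list]:
    """Group record ids into issue buckets: duplicate, incomplete, bad_category."""
    issues = {"duplicate": [], "incomplete": [], "bad_category": []}
    by_key = defaultdict(list)
    for rec in records:
        key = normalize(rec.get("name") or "")
        if key:
            by_key[key].append(rec["id"])
        if any(not rec.get(f) for f in REQUIRED_FIELDS):
            issues["incomplete"].append(rec["id"])
        category = rec.get("category")
        if category and category not in APPROVED_CATEGORIES:
            issues["bad_category"].append(rec["id"])
    for ids in by_key.values():
        # Every record after the first that shares a key is counted as a duplicate.
        issues["duplicate"].extend(ids[1:])
    return issues


def duplicate_rate(records: list[dict]) -> float:
    """Share of records that duplicate an earlier record with the same normalized name."""
    return len(profile(records)["duplicate"]) / len(records) if records else 0.0


customers = [
    {"id": 1, "name": "Acme Medical, Inc.", "tax_id": "111", "category": "hospital"},
    {"id": 2, "name": "ACME MEDICAL INC", "tax_id": "111", "category": "smoke shop"},
    {"id": 3, "name": "Beta Labs", "tax_id": "", "category": "laboratory"},
]
print(profile(customers))
# {'duplicate': [2], 'incomplete': [3], 'bad_category': [2]}
```

Exact-key matching after normalization only catches the easiest duplicates; it gives a lower bound on the duplication rate, and fuzzy matching is needed for the rest.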

Another major contributor was the manual data entry process. Hundreds of master data entry forms had been created in antiquated software such as Lotus Notes. With security nearly nonexistent, anyone in the company with a Lotus Notes login ID could go to the electronic forms section, find the customer record creation form, and create a master record. The same was true for vendor and material records.

When a record reached one of the three shared services groups (all in low-cost countries), the focus was on how quickly it could be entered into the ERP system; the team was measured on SLA compliance, not quality. Every completed master record required at least two manual entries: first into Lotus Notes, and later into the ERP system. At one of the French facilities we found a scenario where the data was touched three times: first entered in a CRM database, then printed out and handed to a data entry person who re-entered it into Lotus Notes, and finally entered into the ERP system a third time at one of the shared service centers.

Three (3) manual entry points!

We also discovered other master data problem areas: vendor records created with only two fields (company name and tax number), employee records in which the same employee had two job classifications, material records with multiple catalogue numbers assigned to the same physical part, and so on.

Was there a path forward?

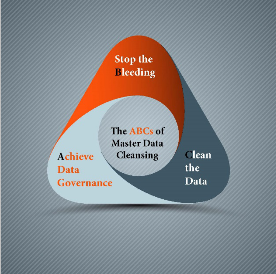

After careful analysis of the sources of the problem (and there were several), the MDM team developed and launched a three-step master data clean-up approach:

1. Establish Data Governance

2. Stop the Bleeding

3. Clean the Data

Data Governance – good data governance requires an overarching strategy for how governance will be applied to the process, linked to tactical governance documentation that gives detailed instructions for creating and modifying data. Together, the strategic and tactical documents should cover every process step from data inception to data retirement, documenting data standards, archiving, and the approval process along the way.

MDM training must also be provided and a master data council established. The council serves as the escalation path when master data issues arise during record creation. Escalation should begin with a subject matter expert, in this case a Data Steward: someone who sits in the business and fully understands the impact of the data on it. Data Stewards are part of a hierarchy with the Data Trustee at the next level up and, at the top, the business leaders and the Master Data Officer, executives empowered to make decisions about the disposition of data that affects the company. The key point is not to let this responsibility migrate into the IT space. IT does not own the data; it acts as the data’s custodian. The business owns the data, and the business must be involved.
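The escalation path described above can be modeled as an ordered chain of business roles. This is a minimal sketch under stated assumptions: the `DataIssue` class, its fields, and the example issue are hypothetical, and only the three role names come from the text. IT is intentionally absent from the chain, reflecting its role as custodian rather than owner.

```python
from dataclasses import dataclass

# Escalation levels as described in the text, lowest first. All are business roles;
# IT acts as custodian of the data and is deliberately not part of the chain.
ESCALATION_CHAIN = (
    "Data Steward",
    "Data Trustee",
    "Business leaders / Master Data Officer",
)


@dataclass
class DataIssue:
    description: str
    level: int = 0        # index into ESCALATION_CHAIN
    resolution: str = ""  # empty until someone decides the issue

    @property
    def owner(self) -> str:
        """The role currently responsible for deciding this issue."""
        return ESCALATION_CHAIN[self.level]

    def escalate(self) -> str:
        """Move the issue one level up the hierarchy and return the new owner."""
        if self.level + 1 >= len(ESCALATION_CHAIN):
            raise RuntimeError("already at the top of the escalation chain")
        self.level += 1
        return self.owner

    def resolve(self, decision: str) -> None:
        """Record the decision, attributed to the current owner."""
        self.resolution = f"{self.owner}: {decision}"


# Hypothetical example: the steward cannot decide, so the trustee does.
issue = DataIssue("Two customer records share one tax number; which is the gold copy?")
issue.escalate()
issue.resolve("keep the older record as the gold copy")
print(issue.resolution)
```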

Stop the Bleeding – stop creating more bad data. Education, training, and tool automation should all come into play at this point, both to ensure that employees fully understand the impact of poor data quality and to automate the process defined in the data governance documentation. Build automation that adds a layer of security, so that only authorized individuals can create and/or modify master data; add workflow rules that ensure each record is complete and that its categories are consistent and appropriate; and, finally, use a fuzzy-logic search to test for potential duplicates already present in the ERP system before creating another record. New records should be created in a test or QA instance, not in production; once all process steps are complete (…and approved), the record can then be transferred to the production instance.
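The QA-first rule can be enforced as a simple one-way state machine, so that no record reaches production without passing validation and approval. The stage names and the `MasterRecordRequest` class below are hypothetical; this is a sketch of the idea, not how any particular ERP or HDPlus implements record promotion.

```python
from enum import Enum


class Stage(Enum):
    CREATED_IN_QA = 1  # record exists only in the test/QA instance
    VALIDATED = 2      # completeness, category and duplicate checks passed
    APPROVED = 3       # approved by the responsible data steward
    IN_PRODUCTION = 4  # transferred to the production instance


# Each stage may only advance to the next one; nothing jumps straight to production.
NEXT_STAGE = {
    Stage.CREATED_IN_QA: Stage.VALIDATED,
    Stage.VALIDATED: Stage.APPROVED,
    Stage.APPROVED: Stage.IN_PRODUCTION,
}


class MasterRecordRequest:
    def __init__(self, record_id: str) -> None:
        self.record_id = record_id
        self.stage = Stage.CREATED_IN_QA
        self.history = [self.stage]

    def advance(self, target: Stage) -> None:
        """Move to `target` only if it is the next allowed stage."""
        if NEXT_STAGE.get(self.stage) is not target:
            raise ValueError(
                f"{self.record_id}: cannot move from {self.stage.name} to {target.name}"
            )
        self.stage = target
        self.history.append(target)


req = MasterRecordRequest("CUST-0001")
try:
    req.advance(Stage.IN_PRODUCTION)  # skipping validation and approval is rejected
except ValueError as err:
    print(err)  # CUST-0001: cannot move from CREATED_IN_QA to IN_PRODUCTION
for stage in (Stage.VALIDATED, Stage.APPROVED, Stage.IN_PRODUCTION):
    req.advance(stage)
print(req.stage.name)  # IN_PRODUCTION
```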

Automating the process wherever possible adds the needed security and applies the logic that ensures records are correctly classified and complete, that data standards are enforced, and that each record is checked for duplication.
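Put together, these checks form a gate that runs before a new record is accepted. The sketch below is a hypothetical illustration: the user list, required fields, category list, and 0.85 threshold are assumptions, and Python’s standard `difflib.SequenceMatcher` similarity ratio stands in for the fuzzy-logic search; it is not the matching algorithm used by HDPlus or any ERP.

```python
from difflib import SequenceMatcher

# All of the following settings are hypothetical; in practice they would come from
# the tactical data governance documentation.
AUTHORIZED_CREATORS = {"jdoe", "asmith"}
REQUIRED_FIELDS = ("name", "tax_id", "country", "category")
APPROVED_CATEGORIES = {"hospital", "clinic", "laboratory", "distributor"}
DUPLICATE_THRESHOLD = 0.85  # name similarity at or above this is flagged


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity ratio in [0, 1] from difflib."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def validate_new_record(user: str, record: dict, existing: list[dict]) -> list[str]:
    """Return every problem found; an empty list means the record may go to approval."""
    problems = []
    if user not in AUTHORIZED_CREATORS:
        problems.append(f"user {user!r} is not authorized to create master data")
    missing = [f for f in REQUIRED_FIELDS if not record.get(f)]
    if missing:
        problems.append("missing required fields: " + ", ".join(missing))
    category = record.get("category")
    if category and category not in APPROVED_CATEGORIES:
        problems.append(f"category {category!r} is not on the approved list")
    name, tax_id = record.get("name") or "", record.get("tax_id")
    for other in existing:
        score = similarity(name, other.get("name") or "")
        same_tax_id = bool(tax_id) and tax_id == other.get("tax_id")
        if same_tax_id or score >= DUPLICATE_THRESHOLD:
            problems.append(f"possible duplicate of {other['id']} (name similarity {score:.2f})")
    return problems


existing = [{"id": "C100", "name": "Acme Medical Inc", "tax_id": "111"}]
new = {"name": "Acme Medical Inc.", "tax_id": "222", "country": "FR", "category": "hospital"}
print(validate_new_record("jdoe", new, existing))
# ['possible duplicate of C100 (name similarity 0.97)']
```

Returning all problems at once, rather than stopping at the first, lets the requester fix a record in one pass instead of resubmitting repeatedly.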

Cleansing the Data – once data governance is in place and the creation of new data is under control, cleansing the existing data can be addressed.

The first step in cleansing is to profile the existing data to fully understand what issues are present: wrong categorization, incomplete records, duplication, outdated records, and so on. Grouping the data by these criteria breaks the job of cleansing a large volume of data into manageable sections. Eventually, profiling will reach a stage where the data stewards and trustees need to be engaged to validate data categories, decide which of a set of duplicate records should be the gold copy, define the data creation rules, and determine how to consolidate the accompanying transactions. Keep in mind that in most ERP systems, once a transaction has been logged against a master data record, simply consolidating the master records is no longer an option: the transaction records must also be modified to point to the correct gold copy, and the duplicate master data record marked as “blocked.”
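The consolidation rule in the last sentence can be sketched with in-memory data. The `MasterRecord` and `Transaction` structures and the `consolidate` function are hypothetical; a real ERP would perform this through its own tools and change documents, not by editing objects directly. The sketch only shows the order of operations: re-point transactions to the gold copy first, then mark the duplicates as blocked rather than deleting them.

```python
from dataclasses import dataclass


@dataclass
class MasterRecord:
    id: str
    name: str
    blocked: bool = False


@dataclass
class Transaction:
    id: str
    master_id: str  # reference to the master record the transaction was logged against


def consolidate(gold_id: str, duplicate_ids: set[str],
                masters: dict[str, MasterRecord],
                transactions: list[Transaction]) -> int:
    """Re-point transactions from duplicates to the gold copy, then block the duplicates.

    Returns the number of transactions that were re-pointed.
    """
    if gold_id in duplicate_ids:
        raise ValueError("the gold copy cannot also be a duplicate")
    if masters[gold_id].blocked:
        raise ValueError("the gold copy must not be blocked")
    moved = 0
    for txn in transactions:
        if txn.master_id in duplicate_ids:
            txn.master_id = gold_id
            moved += 1
    for dup_id in duplicate_ids:
        masters[dup_id].blocked = True  # blocked, not deleted
    return moved


masters = {m.id: m for m in (MasterRecord("C1", "Acme Medical"),
                             MasterRecord("C2", "ACME MEDICAL INC"),
                             MasterRecord("C3", "Beta Labs"))}
txns = [Transaction("T1", "C1"), Transaction("T2", "C2"),
        Transaction("T3", "C2"), Transaction("T4", "C3")]
print(consolidate("C1", {"C2"}, masters, txns))  # 2
print([t.master_id for t in txns])               # ['C1', 'C1', 'C1', 'C3']
```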

Once the data is fully profiled and all corrections are documented, the data modifications should be tested in a development or QA environment that mimics production. End-to-end testing of all cleansed data is critical and must be done before the cleansed data is moved into production.
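Part of that end-to-end testing can be automated as a set of checks run against the QA copy before promotion. The function name, the dict-based record layout, and the two checks chosen here (no transaction still references a missing or blocked master record, and every active master record has its required fields) are hypothetical examples, not a complete test plan.

```python
def pre_production_checks(masters: dict[str, dict], transactions: list[dict],
                          required_fields: tuple[str, ...] = ("name", "tax_id")) -> list[str]:
    """Return failures that should stop cleansed data from being promoted."""
    failures = []
    for txn in transactions:
        master = masters.get(txn["master_id"])
        if master is None:
            failures.append(f"{txn['id']}: references missing master {txn['master_id']}")
        elif master.get("blocked"):
            failures.append(f"{txn['id']}: still references blocked master {txn['master_id']}")
    for master_id, master in masters.items():
        if master.get("blocked"):
            continue  # blocked duplicates are retired, not validated
        missing = [f for f in required_fields if not master.get(f)]
        if missing:
            failures.append(f"{master_id}: missing " + ", ".join(missing))
    return failures


qa_masters = {
    "C1": {"name": "Acme Medical", "tax_id": "111"},
    "C2": {"name": "ACME MEDICAL INC", "tax_id": "111", "blocked": True},
}
qa_transactions = [{"id": "T1", "master_id": "C1"}, {"id": "T2", "master_id": "C2"}]
print(pre_production_checks(qa_masters, qa_transactions))
# ['T2: still references blocked master C2']
```

Promotion would proceed only when the returned list is empty.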

Restoring the quality of master data is a significant task, and of equal importance is putting the processes in place to keep the data clean with good data governance and automated procedures.

The master data cleansing module in HDPlus can help you automate much of the above, including building the data governance model and documentation, approvals, and fuzzy search capability. HDPlus MDM also provides a dashboard that gauges the condition of master data at all times.

We offer consulting to deploy the HDPlus MDM module, covering initial deployment, training, and ongoing support. Automated master data cleansing processes cost millions when purchased from the large ERP companies; with HDPlus MDM you can replicate them and spend orders of magnitude less. Our team can provide the training, launch your MDM clean-up process, and then support you as needed.